Ethical AI Starts in Unreal Engine

A guide to putting the artist, not the algorithm, back in charge.

Hello everyone,

First, a quick follow-up on our last discussion. The proposed 10-year moratorium on state-level AI regulation, part of a larger bill, was stripped out by the Senate in an overwhelming 99-1 vote. This is a significant development (and a relief), and I’ll do my level best to track any policy changes that affect our creative community and keep you updated here.

Now, for today’s main topic…

Unreal Engine: The Heart of an Ethical, Controllable AI Workflow

This week, I want to walk you through a process that achieves two critical goals: it makes your AI workflow more ethical, and it gives you the kind of creative control that even multi-model AI generators often lack.

The journey starts with Unreal Engine.

If you haven’t dipped your toe into Unreal yet, now is the perfect time. With the recent animation and rigging advances in version 5.6 and its powerful real-time camera controls, the engine is on par with many of the dedicated DCCs you might already use. Blender is also an amazing platform if you prefer it, but I promise: once you take your first intro-to-UE tutorial, you won’t want to work any other way.

I’ll outline two distinct approaches. We'll start with a practical workflow that anyone new to these tools can try, followed by a more advanced method that gets us almost all the way to a fully ethical process.

Approach #1: A Practical, Controllable Starting Point

This first workflow demonstrates how to maintain creative control, even if it requires a small ethical compromise along the way.

Why provide this option? As I have said over and over, I think it’s important to stay curious and educate ourselves about the tools. I chose these tools specifically so that your level of technical experience, or the time you have available to learn new processes, wouldn’t become an obstacle. Learn the tools now, with educational intention, so that you’re ready when many ethical workflows are at our fingertips.

Step 1: Building Your Scene (The Ethical Foundation)

Your control begins in Unreal Engine, where the ethics are clear.

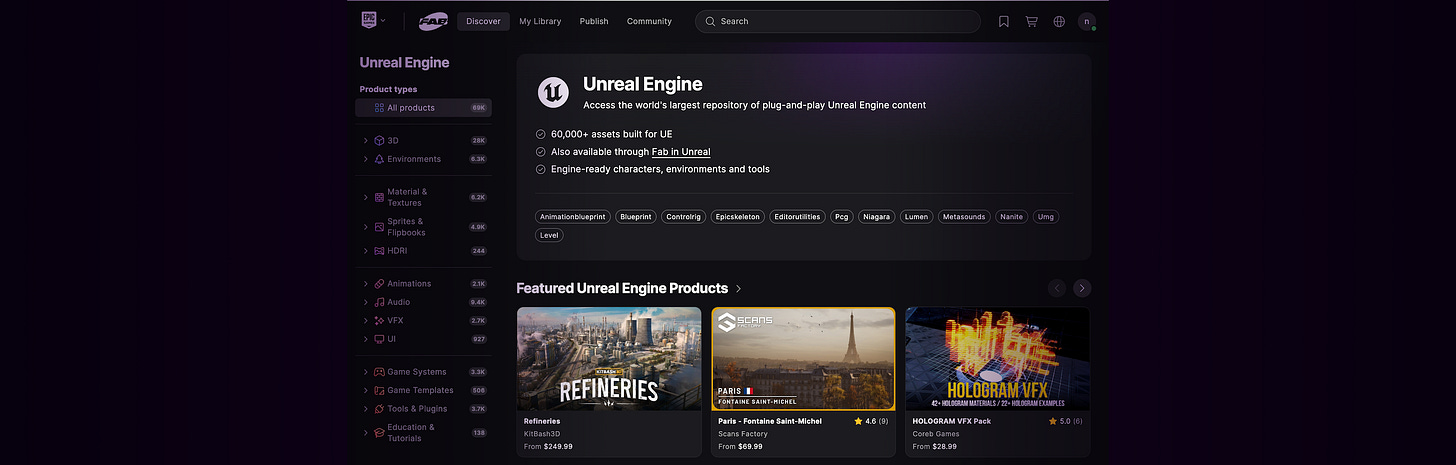

Source Your Assets: You have full access to Fab (which replaced the Unreal Engine Marketplace), an extensive library where you can license content from individuals and studios with complete transparency on usage rights. Epic also offers a selection of assets on its "free for the month" page, which is updated on the first Tuesday of every month.

Compose Your Shot: For my example, I purchased a cartoon scene and a goblin character from Fab. I then posed the characters and set up two shots in Sequencer. To keep it simple, I skipped animation and mocap and captured a high-resolution screenshot directly from the engine.

Generate Your Style: Next, I used Adobe Firefly to generate style concepts ethically. I prompted for images in the same genre until I found a composition I could work with. Then I developed a base prompt, reused it, and experimented with different style options for more variation. I landed on three specific styles I wanted to explore further.
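If it helps to see the "one base prompt, many styles" idea as a pattern, here is a minimal Python sketch. Firefly is driven through its own interface, so this only shows how to keep the subject and composition wording fixed while swapping the style wording; the prompt text and style names below are hypothetical placeholders, not the prompts from my example.

```python
from typing import Dict

# Hypothetical base prompt: subject and composition stay fixed across variations.
BASE_PROMPT = (
    "cartoon goblin standing in a cluttered workshop, "
    "wide shot, warm interior lighting"
)

# Hypothetical style modifiers: only this part changes between generations.
STYLE_OPTIONS = {
    "storybook": "watercolor storybook illustration, soft edges",
    "claymation": "stop-motion clay look, visible fingerprints",
    "cel": "flat cel-shaded animation, bold ink outlines",
}


def build_prompts(base: str, styles: Dict[str, str]) -> Dict[str, str]:
    """Append each style modifier to the same base prompt."""
    return {name: f"{base}, {modifier}" for name, modifier in styles.items()}
```

Calling `build_prompts(BASE_PROMPT, STYLE_OPTIONS)` gives one prompt per style, each starting with the identical base text, so differences between results come from the style wording rather than from accidental changes to the subject.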

At this point, everything is ethically sourced and fully controlled by you.

Step 2: The Style Transfer (The Ethical Compromise)

Now, on to the not-so-ethical part of the process.

I’ve always found that having a first frame with your desired style already applied yields the best overall style transfer results when you are ready for the video2video step of this workflow.

There are a few ways to set up a workflow that transfers each of the three Firefly styles onto your single Unreal Engine screenshot.

My Recommendation: Use ComfyUI. While you can find many pre-existing workflows on sites like RunComfy, OpenArt, or ThinkDiffusion, I highly recommend finding a tutorial that walks you through building the workflow step-by-step. This will help you truly understand the hows and whys of the process. Learning it "the long way" will serve you well, giving you the control to adjust parameters, which is especially important when your source and style images are extremely different.
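Once you have built the workflow by hand, you can also queue it from a script, which is handy for running the same graph once per style image. This is a minimal sketch using only the Python standard library. It assumes ComfyUI is running locally on its default address (`http://127.0.0.1:8188`), that the workflow was exported in API format, and that your style images sit in ComfyUI's `input` folder so the `LoadImage` node can find them. The node ID and file names are hypothetical and will differ in your graph.

```python
import copy
import json
import urllib.request
import uuid
from typing import Optional

# ComfyUI's default local address; change it if your server runs elsewhere.
COMFY_URL = "http://127.0.0.1:8188"


def with_style_image(workflow: dict, node_id: str, filename: str) -> dict:
    """Return a copy of an API-format workflow with one LoadImage node repointed.

    node_id is whatever ID your exported graph gives the style-image loader.
    """
    updated = copy.deepcopy(workflow)
    updated[node_id]["inputs"]["image"] = filename
    return updated


def build_prompt_payload(workflow: dict, client_id: Optional[str] = None) -> bytes:
    """Wrap the workflow in the JSON body that ComfyUI's POST /prompt expects."""
    body = {"prompt": workflow, "client_id": client_id or str(uuid.uuid4())}
    return json.dumps(body).encode("utf-8")


def queue_workflow(workflow: dict, base_url: str = COMFY_URL) -> dict:
    """Queue a workflow on a running ComfyUI server and return its JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/prompt",
        data=build_prompt_payload(workflow),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

With the exported graph loaded via `json.load`, a loop such as `for style in ["style_a.png", "style_b.png", "style_c.png"]: queue_workflow(with_style_image(workflow, "12", style))` would queue one job per style; the reply should include a prompt ID you can use to find the result in ComfyUI's queue.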

Alternative: If you are not comfortable with ComfyUI, you can use other apps. I find that uploading your screenshot and style image to ChatGPT and using GPT-4o image generation is pretty effective as well. For that, use a simple prompt along the lines of: “Please restyle the first image I uploaded using the second image as style guidance. Ensure that the composition, pose, layout, and size all remain the same.”

After this step, you will have your source image (first frame from UE) with three different styles applied to it.

Step 3: Generating Motion

At this point, you need a video sequence to apply your styles to. You have two clear paths forward, depending on your skillset.

A Practical Shortcut: Since I’m probably one of the worst animators on the planet, I opted for a video generator to save time. I used my Unreal screenshot as the first frame to create an 8-second shot in a tool like Veo 3 (cue the hissssss). This gets you a source video quickly.

The Animator's Path: Of course, if you are a rockstar animator or are really good at mocap cleanup, you can take the path of maximum control. Animate your shot directly in Unreal, giving you full command over the performance, and then export your video sequence.
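If your Unreal render comes out of Movie Render Queue as a numbered image sequence, most video-to-video tools will want it as a single video file. Here is a minimal Python sketch that builds an ffmpeg command for that conversion. It assumes ffmpeg is installed and on your PATH; the file-name pattern and output name are hypothetical, so match them to your own render settings.

```python
import shlex
from typing import List


def image_sequence_to_mp4_cmd(
    pattern: str, output: str, fps: int = 24, start_number: int = 0
) -> List[str]:
    """Build an ffmpeg command that turns a numbered PNG sequence into an H.264 MP4.

    pattern uses printf-style numbering, e.g. "renders/Shot01.%04d.png".
    """
    return [
        "ffmpeg",
        "-framerate", str(fps),              # frame rate of the image sequence
        "-start_number", str(start_number),  # first frame index in the file names
        "-i", pattern,
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",               # widely compatible pixel format
        "-crf", "18",                        # quality setting; lower means higher quality
        output,
    ]


cmd = image_sequence_to_mp4_cmd("renders/Shot01.%04d.png", "shot01_source.mp4")
print(shlex.join(cmd))  # the command as you would type it in a terminal
```

To actually run it from Python, `subprocess.run(cmd, check=True)` will raise an error if ffmpeg fails. Set `fps` to the frame rate you rendered at in Unreal so the timing of your performance is preserved.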

Step 4: The Final Composite

Now that you have your source video and the three styled images that match its first frame, you can perform the final video-to-video style transfer.
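Because the transfer works best when each styled image truly matches frame one, it can be worth pulling that frame from the final source video and confirming that your styled image has the same resolution (image generators don't always return the size you gave them). A minimal standard-library sketch, assuming ffmpeg is on your PATH and both images are PNGs; the file names you pass in are up to you.

```python
import struct
from typing import List, Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def first_frame_cmd(video: str, output_png: str) -> List[str]:
    """ffmpeg command that writes only the first decoded frame of a video."""
    return ["ffmpeg", "-i", video, "-frames:v", "1", output_png]


def png_size(data: bytes) -> Tuple[int, int]:
    """Read (width, height) from a PNG's IHDR chunk, which always comes first."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def sizes_match(styled_png: bytes, frame_png: bytes) -> bool:
    """True when the styled still and the extracted frame share a resolution."""
    return png_size(styled_png) == png_size(frame_png)
```

After running the command with `subprocess.run(first_frame_cmd("source.mp4", "frame0.png"), check=True)`, read both files with `Path(...).read_bytes()` and pass them to `sizes_match`. If the sizes differ, resize or crop the styled image before the video-to-video step.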

My Recommendation: For maximum control over the final look, I again recommend using ComfyUI. While there are many ways to approach this, Arcane Ai Alchemy has a great tutorial for a video-to-video workflow that uses a model called "Wan 2.1 Vace Video2Video," which I've found to be very effective.
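One practical detail when moving a clip into a Wan-based workflow is the frame count. My understanding is that Wan 2.1 works with frame counts of the form 4n + 1 (81 frames at 16 fps is a common default), so a clip often needs trimming to fit. Treat that as an assumption and check the length setting in the tutorial's workflow; this small Python sketch just does the arithmetic.

```python
# Assumption: Wan 2.1 expects a frame count of the form 4n + 1
# (for example the common default of 81 frames at 16 fps).


def frames_at_fps(seconds: float, fps: int) -> int:
    """Frame count after conforming a clip to a target frame rate."""
    return round(seconds * fps)


def wan_frame_count(available_frames: int) -> int:
    """Largest 4n + 1 frame count that does not exceed the frames you have."""
    if available_frames < 1:
        raise ValueError("need at least one frame")
    return ((available_frames - 1) // 4) * 4 + 1
```

For example, an 8-second clip at 24 fps has 192 frames, and `wan_frame_count(192)` gives 189; conformed to 16 fps it has 128 frames, and the helper gives 125. Longer clips also need more VRAM, so you may still have to split a shot into segments.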

Alternative: Runway Gen-3 v2v is very intuitive and can produce great results quickly. Simply upload your video, then your stylized first frame, and generate.

The final result of this process is your original, controlled performance, now rendered in three distinct styles. It’s flawed, yes, but I wanted to outline the process using the easiest, most accessible approach possible.

Approach #2: The Gold Standard (A Highly Ethical Workflow)

This second approach is for those who want to get as close as possible to a purely ethical workflow. It requires more effort but gives you unparalleled creative authority.

Step 1: Layout & Animation in Unreal

This is where your ethical journey begins. Animate your shot in Unreal using either hand-keyed animation or purchased mocap data. All assets should be either self-created or licensed from a library like Fab. You have complete control over every element, including lighting, materials, and FX, and you can render your video out in real time. This source video is ethically clean.

Step 2: Ethical Style Ideation

If you have your own style concepts, you're set. If not, use ethical sources such as Adobe Firefly or licensed stock image galleries to generate or gather the images for your LoRA.

Step 3: LoRA Training

Once you have 40-60 style images, it's time to train your LoRA. Here, you'll again face the challenge of limited ethical base models. You can explore Bria, which is built on licensed data. (Stay tuned for a future newsletter where I’ll compare Bria and Flux using the same dataset!)
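If you train locally, a little dataset prep goes a long way. This is a minimal Python sketch assuming a kohya-style trainer, which reads a `.txt` caption file next to each image; hosted services (Bria included) may have their own upload format, so check their docs. The trigger word, description, and the repeats/epochs/batch numbers are hypothetical examples, not recommended settings, and the step count is an approximation since trainers differ slightly.

```python
import math
from pathlib import Path
from typing import List

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def list_images(folder: Path) -> List[Path]:
    """All style images in a folder, sorted by name."""
    return sorted(p for p in folder.iterdir() if p.suffix.lower() in IMAGE_EXTS)


def caption_path(image: Path) -> Path:
    """Kohya-style trainers look for a .txt caption next to each image."""
    return image.with_suffix(".txt")


def write_captions(images: List[Path], trigger: str, description: str) -> None:
    """Write one caption per image, leading with a (hypothetical) trigger word."""
    for image in images:
        caption_path(image).write_text(f"{trigger}, {description}", encoding="utf-8")


def in_target_range(count: int, low: int = 40, high: int = 60) -> bool:
    """Check the 40-60 image guideline from this walkthrough."""
    return low <= count <= high


def total_steps(num_images: int, repeats: int, epochs: int, batch_size: int) -> int:
    """Approximate optimizer steps: each image is seen `repeats` times per epoch."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs
```

As a worked example, 50 images with 10 repeats and a batch size of 2 gives 250 steps per epoch, so 10 epochs comes to `total_steps(50, 10, 10, 2) == 2500` steps. Knowing that number up front helps you estimate training time before you commit.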

Step 4: Video-to-Video (The Final Hurdle)

This is where ethical options for independent creators currently hit a wall. A frame-by-frame style transfer in ComfyUI using your LoRA and ControlNet is an option, but I’ve found it difficult to control.

For better results, I recommend an AnimateDiff workflow. While it may involve ethically gray components, it offers superior adherence to your source video. At this stage, you can feel proud that you have been as ethically intentional as possible. Your goal was to understand the tools and navigate them responsibly, and you've achieved that.
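AnimateDiff motion modules work on short chunks of frames, so a longer shot is typically processed in overlapping "context windows" that are blended where they overlap. This simplified Python sketch shows how such windows could be laid out. The 16-frame length and 4-frame overlap are assumed values that, as I understand it, match common defaults in ComfyUI's AnimateDiff-Evolved nodes; their actual scheduling options are more elaborate than this.

```python
from typing import List, Tuple


def context_windows(
    num_frames: int, length: int = 16, overlap: int = 4
) -> List[Tuple[int, int]]:
    """Split a clip into overlapping [start, end) windows of `length` frames.

    The final window is shifted back so it ends exactly on the last frame.
    """
    if not 0 <= overlap < length:
        raise ValueError("overlap must be >= 0 and smaller than length")
    if num_frames <= length:
        return [(0, num_frames)]
    stride = length - overlap
    starts = list(range(0, num_frames - length + 1, stride))
    if starts[-1] + length < num_frames:
        starts.append(num_frames - length)
    return [(s, s + length) for s in starts]
```

For a 40-frame shot this yields `(0, 16)`, `(12, 28)`, and `(24, 40)`: every frame is covered, and neighboring windows share four frames, which gives the blending something to work with at the seams.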

And if it isn’t obvious by now…

If you're an animator or skilled with mocap, you can skip the AI video generator entirely and animate your shot in Unreal. This is where your creative control truly comes into play.

You can apply every principle of animation with your unique signature style, controlling the performance, layout, and lighting. In this workflow, AI does the heavy lifting of that one pesky part we all loathe… waiting for renders! Many tools even allow you to add render passes to your video-to-video workflow for further refinement in comp.

This is total creative control, driven by ethical intention.