Following up on last week’s newsletter, I wanted to put theory into practice. I set out to create a short film using a workflow that was as ethical and accessible as possible (with some constraints), documenting the entire process along the way.

The result is a short project I’m calling "Slow News Day."

…Now, could that have been better? Absolutely! And are there far more visionary artists who could do better? Huh-YUP! But that wasn’t the point of this exercise. The point is to walk through a process with less stress. I completely understand how tricky it is to embrace a technology that’s suddenly ubiquitous and so controversial. Hopefully this helps…

The Concept: A Solution Born from Limitations

I chose my tools first, based on two key points: their ethical transparency and their practical usability, given my two-day timeline. I skipped training LoRAs and building ComfyUI workflows to keep this process beginner-friendly.

Character consistency was tricky, so I decided on a simple approach: each news anchor or reporter gets their own single shot. While some tools can solve consistency better, exploration wasn’t my goal; careful, ethical selection was.

The idea itself was inspired by pre-9/11 news reporting, where even small stories felt urgent. With today’s intense news landscape, I deeply miss that simplicity.

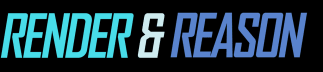

As always, I did a shot breakdown. If you’re using Notion or Google Sheets and want my custom template, just ask. Trust me, detailed breakdowns save tons of editing later.
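For anyone who would rather start from a script than a spreadsheet, here is a minimal sketch of a shot breakdown exported as CSV, which Google Sheets and Notion can both import. The column names and the example rows are my own assumptions for illustration; this is not the custom template mentioned above.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class Shot:
    """One row of a shot breakdown. All column names are hypothetical."""
    number: int
    shot_type: str
    description: str
    image_tool: str
    video_tool: str
    status: str = "todo"


def to_csv(shots):
    """Return the breakdown as CSV text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Shot)])
    writer.writeheader()
    for shot in shots:
        writer.writerow(asdict(shot))
    return buffer.getvalue()


# Illustrative rows only; the real breakdown for the film may differ.
shots = [
    Shot(1, "title card", "Animated 'Breaking News' title card", "Adobe Express", ""),
    Shot(2, "reporter", "Female reporter in a city park", "Firefly", "Hedra"),
    Shot(3, "insert", "Insert shot", "Firefly", "Veo3"),
]

csv_text = to_csv(shots)
print(csv_text)
```

Save the printed text as a `.csv` file and import it, then add or rename columns to match how you actually track your shots.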

The Ethical Toolkit Breakdown:

Here is a detailed look at every tool used in the project.

Adobe Firefly Boards (Beta)

How I used it: I used this for a quick mood board to help with ideation.

Adobe Firefly

Why I chose it: I chose Firefly because I am a longtime user of Adobe's suite of tools and was eager to try their new video generation tool. I also selected it due to its transparency around its ethical datasets.

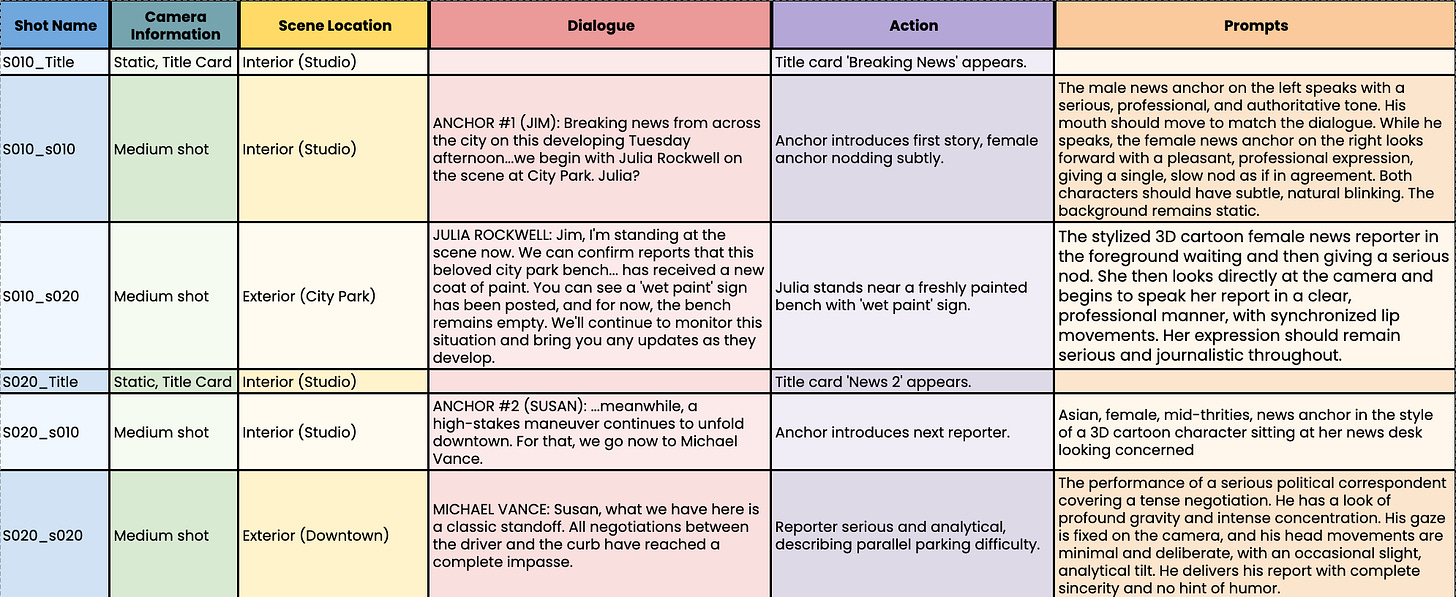

How I used it: I selected the Firefly Image 4 ultra model, set my aspect ratio to 16:9, and set the content type to Art.

For these first few generations, I kept every other parameter at its defaults and hyper-focused on what it would take in my prompt to get the visual results I was after. Getting the prompt right early can really help when you generate the images for your next shots.

Here is an example showing the results of one of my first prompts using the “3D” theme Effect versus a more refined and detailed prompt with no effects.

“Female reporter in a park, serious expression, holding microphone with a news 5 logo, wearing professional clothing.”

“A stylized 3D adult cartoon character of a female news reporter. Late twenties, dark brown hair. She has a serious and concerned expression. She wears a smart, professional blue blazer and has a neat hairstyle. She is standing slightly off to the side on a sunny day in a city park, holding a modern broadcast microphone with the “Channel 5 Action News logo on the mic flag”. In the slightly out-of-focus background, a single freshly painted, red, park bench with a small 'Wet Paint' sign is visible. The style is clean 3D animation with bold lines and colors”
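The difference between the two prompts above is mostly structure: the refined one names a style, a subject, an appearance, an expression, wardrobe, a setting, a prop, and a background. Below is a minimal sketch of keeping those pieces in separate fields so they can be reused from shot to shot. The `ShotPrompt` class and its field names are hypothetical, not part of Firefly or any other tool; the output is plain text to paste into the prompt box.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """Hypothetical container for the details a refined image prompt spells out."""
    style: str
    subject: str
    appearance: str
    expression: str
    wardrobe: str
    setting: str
    prop: str
    background: str

    def build(self) -> str:
        """Join the non-empty parts into sentences, in a fixed order."""
        parts = [
            f"A {self.style} of {self.subject}",
            self.appearance,
            self.expression,
            self.wardrobe,
            self.setting,
            self.prop,
            self.background,
        ]
        # Skip blank fields and make sure each part ends with exactly one period.
        return " ".join(p.strip().rstrip(".") + "." for p in parts if p.strip())


reporter = ShotPrompt(
    style="stylized 3D adult cartoon character",
    subject="a female news reporter",
    appearance="Late twenties, dark brown hair",
    expression="She has a serious and concerned expression",
    wardrobe="She wears a smart, professional blue blazer",
    setting="She is standing in a city park on a sunny day",
    prop="She holds a broadcast microphone with a Channel 5 Action News mic flag",
    background="In the out-of-focus background, a red park bench with a 'Wet Paint' sign is visible",
)

prompt = reporter.build()
print(prompt)
```

Reusing the same `style` value for every character is one low-effort way to keep the look consistent across single shots, though the results still need checking by eye.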

I also relied heavily on “Generate Similar” whenever a generation came out close but not exact, repeating it until I found the right one.

I jumped into Photoshop when I couldn’t get something quite right in Firefly. I used it to make changes like removing unwanted elements and correcting details the AI misinterpreted. For adding new elements, like the "wet paint" sign, I used a digital paint approach.

What worked well: Firefly was a solid image generator, and I had a good overall prompting experience with it once my parameters were tuned properly. I also loved the parameter options available.

What didn’t work well: The Image to Video feature lacked the control I needed for the lip sync. The animation also did not follow my prompt for insert shots. It seemed to perform better with Text-to-Video.

Transparency: It is well documented that Firefly was ethically trained on its "commercially safe" Adobe Stock library. However, it is also well documented that this same dataset included AI-generated images from competing platforms. To me, this ethical line is grey. My question, in light of this, is whether Adobe is making any effort to revise its model audits to detect and remove these images, and to retrain the model if any are identified.

Adobe Express

Why I chose it: I chose Adobe Express to quickly create animated title cards by customizing its pre-made "Breaking News" templates.

Transparency: Its dataset, like Firefly’s, comes from Adobe’s own stock images.

ElevenLabs

Why I chose it: I chose this because it had one of the most flexible, high-quality audio options.

How I used it: I recorded myself speaking, uploaded my recording, and re-generated my audio for the final result. I also used ElevenLabs for my sound effects and music, as cost was a consideration.

What worked well: The options were plentiful and the results were great for this test.

What didn’t work well: It was clear that most of the voice options were male or male-leaning. Some of the sound effects were quite strange and often took several reruns of the same prompt to get something usable.

Transparency: Though the broad message is that the voice datasets are ethical, there is a lot of ambiguity surrounding the training data for their AI music generator.

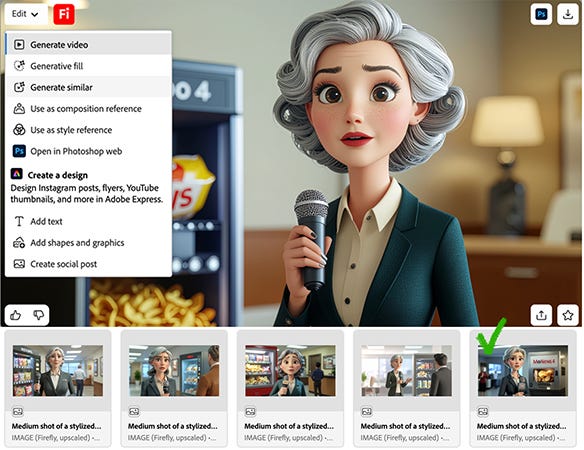

Hedra

Why I chose it: Choosing a halfway-decent, verifiably ethical video generator is difficult. Based on firsthand experience, I knew Hedra had one of the better lip-sync outputs for stylized characters.

How I used it: I uploaded my first frame and my audio, then provided prompts describing the performance I wanted. Hedra seems to have issues when prompts include specifics about the background motion.

What worked well: Being able to upload my own audio made it easy to keep the lip sync in the pacing I wanted. Some of the subtle animations, like head nods, were good.

What didn’t work well: While some moments were decent, most were not. The performance quality did not meet the bar set by real humans. I also felt like the textures were often buzzing, tiled, and swimming. There were obvious hallucinations, and Hedra didn’t always adhere to my first frame.

Transparency: Though Hedra’s founders have made public statements about its models being trained ethically, as of June 2025, Hedra has not published any formal transparency reports. For me, the continued use of Hedra feels problematic.

Veo3

Why I chose it: I chose Veo3 for the two insert shots because it was one of the most predictable of the video generators with audio.

How I used it: I used Veo3’s frame-to-video feature and refined my prompts with Gemini.

What worked well: Of all the video generator options with audio, this was the most predictably good for this specific project.

What didn’t work well: It took several iterations, and I still had to trim the odd parts of my video or audio in the final cut. The user experience on the “discounted subscription” felt like I had paid to be an alpha tester, and I have not been able to make usable short film tests with dialogue and audio in Veo3 yet.

Transparency: As of June 2025, Google has not publicly disclosed the specific composition of the video, audio, and text data Veo3 was trained on. This makes the continued use of Veo3 for anything but exploratory edification ethically problematic.

Final Takeaways and Recommendations

There are a lot of tools out there for you to test, and I recommend testing them. For paid projects that must be transparently and traceably ethical, I would highly recommend the following:

For Image Generation: Firefly and other apps in the Adobe suite.

For Dialogue: ElevenLabs, where you upload your own voice and have AI generate a new output.

For Music: SOUNDRAW is an incredible option, as its training data was created by in-house musicians and composers.

For Video Generation: If you are comfortable animating, give Adobe Character Animator, Reallusion Cartoon Animator, or OpenToonz a try. These are ethical animation tools that allow you to input your AI-generated image and animate inside the app.

I hope this was helpful in some way for those of you trying to either dip your toe into AI ethically or generate income ethically.

Your thoughts, suggestions, disagreements, and questions are all valued. Keep them coming!
